There’s a lot of buzz around AI in edtech. But often missing from the conversation—and the tools themselves—is the voice of the educator. Does the tool respond to teachers’ pain points? Does it help elevate teachers’ instruction? Does it provide a pedagogically sound and active learning experience for students? Does it offer teachers insight into students’ challenges? When it comes to subjective subjects like writing, these questions become even more salient and difficult to address within an edtech tool.

We sat down with Peter Gault, Executive Director and co-founder at Quill.org, to discuss how his organization is addressing these challenges. Quill’s AI-powered literacy tools automatically grade and serve feedback on student writing, enabling students to revise their work and quickly build their skills. Quill was working with AI long before it became a mainstay of the news cycle. Gault shared how Quill is managing the transition from predictive AI to generative AI; why writing is such an important skill; the process of constructing quality feedback; and why we need to engage teachers in the edtech development and implementation process.

What is Quill.org?

Quill.org provides free literacy activities that build reading comprehension, writing, and language skills for elementary, middle, and high school students. We offer a suite of online learning tools, as well as research-based reading and writing instruction in an accessible, open-source digital platform backed by a team of educators, designers, and engineers.

What inspired the creation of Quill? What challenges were you seeking to address?

Quill was born out of a passion for writing and a belief that writing is a superpower. The most valuable learning experience I had growing up was being able to do journalism and debate. Those experiences of having to articulate a viewpoint on an issue or defend ideas built critical thinking skills. These are powerful skills for young people to have.

With Quill, we tried to apply that vision of teaching writing as critical thinking to millions of kids. In fact, the first version of Quill was a debate videogame where students could debate issues and be rewarded for using precise evidence or coming up with logical claims.

We found that the product worked pretty well for many wealthy and privileged students, but not so well for many others—especially students from low-income schools who lacked foundational literacy skills. When you look at the most recent NAEP data, for example, 88% of all low-income students are not proficient with basic writing. We decided to pivot to think about how to address the really big needs that students had in the classroom today.

What approach did Quill take to helping students develop those more foundational literacy skills that are key to writing?

Peg Tyre’s piece in The Atlantic called “The Writing Revolution” became a frame of reference for us. It articulates a really effective methodological approach to writing, where students focus on building strong sentences and then chunk paragraphs.

That’s a really common gap for students. Students often go from very basic writing in elementary school to being asked to write five-paragraph essays in middle school. But they don’t have a lot of explicit practice with building a thesis or using evidence to support a claim.

Over the last ten years, we’ve worked on creating really great sentence-level writing practice with pedagogically sound tools. We’ve launched increasingly sophisticated features—combining sentences, using appositives, connecting ideas with conjunctions—to help students clearly express their ideas in writing. In the future, we’d like to work on providing feedback on paragraphs and essays, grounded in a pedagogy that’s focused on critical thinking through writing.

That feedback piece is really core to Quill’s approach. What does feedback look like in Quill? In what ways does quality feedback help students become better writers?

If I were to describe Quill in one word, it’s feedback. Our theory of action is that when kids get really good feedback on their writing, they’re better able to learn and build their skills. Typically there isn’t a lot of writing that happens in the classroom because it’s so time-intensive for teachers to give that feedback. Instead, you often have students writing journal entries that no one reads, especially in Title I schools where teachers often don’t have time allocated for reading papers and offering feedback.

As a result, there ends up being little reason for students to try to explain their ideas, and there are few opportunities to revise and improve those ideas. Kids often end up viewing videos or answering multiple-choice questions rather than engaging in active learning around writing. At Quill, we were looking for a way to help teachers with that feedback loop since it’s so hard to do manually.

There are cases where entire schools have emphasized writing feedback. For example, the school featured in The Atlantic article was incredibly successful with this work largely because the principal emphasized that every teacher is now a writing teacher. It was not just ELA teachers. Social Studies, Science, and Gym teachers were all writing teachers. They integrated writing and feedback into all of their lessons. But that is a huge commitment that most schools aren’t able to make.

In what ways is Quill able to help teachers in schools where there isn’t that intensive focus on writing and feedback that you’ve just described?

Technology can play a big role in creating this feedback loop, which is especially helpful for schools that lack resources. From the beginning, we focused on students attending Title I schools. Unlike many other edtech products, Quill was always designed for classroom use. The vision has always been that the teacher drives teaching and learning, and Quill is available to supercharge their instruction.

We have around 900 activities that span grades 4-12. Each activity takes about 10-15 minutes. There’s a lot of value in high-impact writing activities that give you immediate feedback. But we would never say that Quill is a tool that students should spend all day on. Instead, we believe that a teacher can use Quill to provide practice on a key lesson or skill that the teacher is teaching.

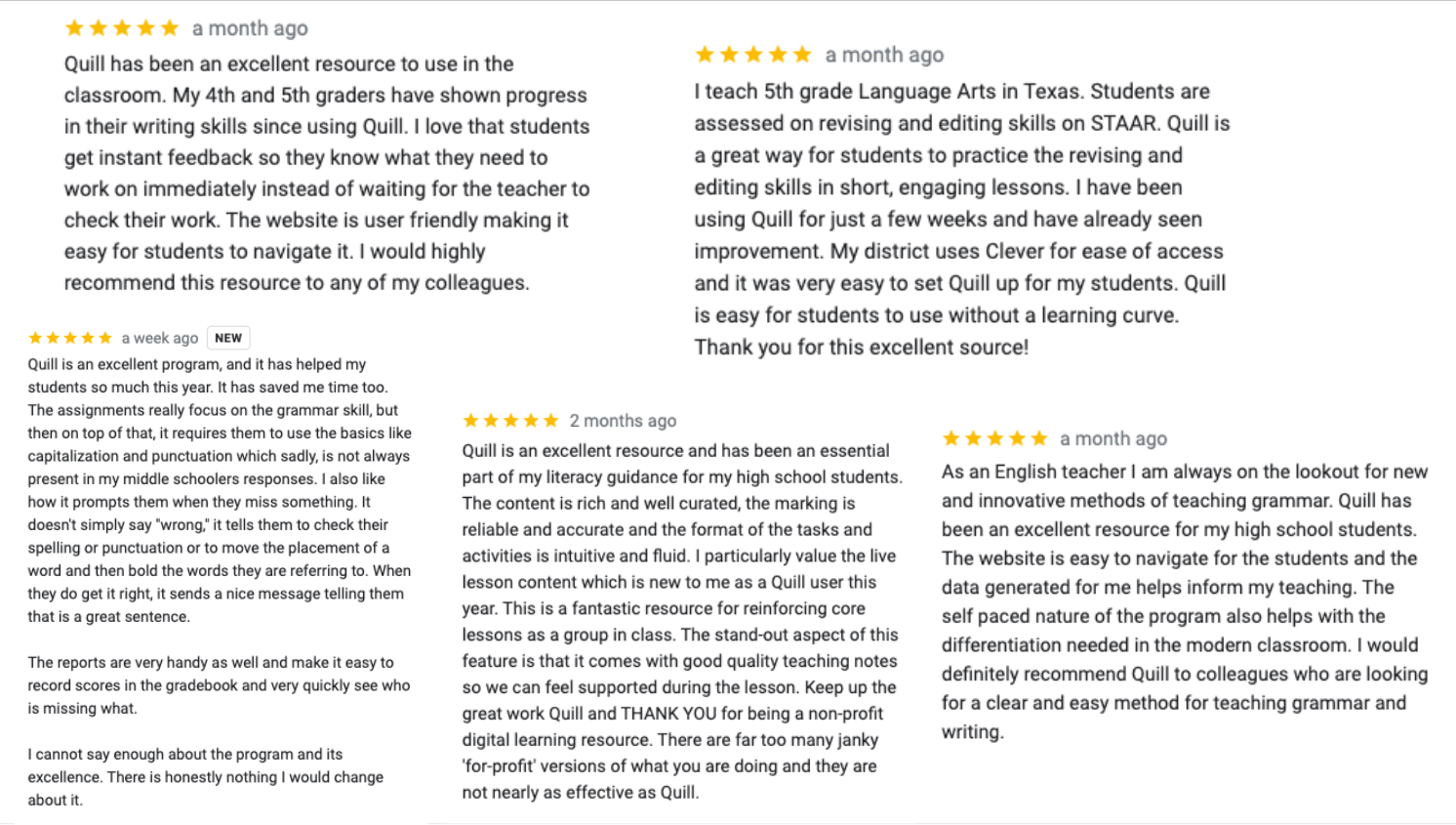

Quill enables students to have an active learning experience, but in a way that can scale. We now serve about three million kids a year across the country, with students coming from about 12,000 schools in the U.S. That’s about 10% of schools across the country and about 50,000 teachers. We’re dedicated to all of our content being free forever. We see that teachers really trust us as a result.

How has your work to serve teachers and students been accelerated by the recent advances in AI? In particular, you’re moving from a predictive AI model (which forecasts future outputs based on past data) to a generative AI model (which creates new outputs resembling the original input using machine learning) to provide feedback. Why are you making this change?

We’ve been building AI models since 2019 and have deep expertise in predictive models. In that approach, we’re looking for some sort of pattern in the writing that we can then use to give feedback. To build that model, we have to capture thousands of sentences and look for the thing that we want in the writing—for example, asking students to use evidence to build a claim.

Rather than creating a generic system for every single student writing prompt, we built a unique dataset of all the possible pieces of evidence a student could provide. Then we categorize that evidence as “strong” or “weak.” When a student uses weak evidence, we give them actionable feedback and coaching to help them revise their writing.

This process was really effective and very scalable in the sense that it could help millions of students. At the same time, it’s very labor-intensive for us to have to build a unique model for every prompt. We had to collect and categorize thousands of responses. We’re currently doing this at the level of individual sentences through our Quill Reading for Evidence tool, but it’s an exponential increase in complexity as you get into longer pieces of writing, such as paragraphs or essays.
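To make the per-prompt approach concrete, here is a minimal sketch of a classifier built from a labeled set of responses for a single prompt. It is an illustration only, not Quill's code: the example sentences, the feedback strings, and the function names are all hypothetical, and a simple word-overlap score stands in for a trained model.

```python
import re

# Hypothetical labeled responses for ONE prompt. The interview describes
# collecting thousands per prompt; four are enough to show the shape.
LABELED = [
    ("schools should start later because teens who sleep more earn higher grades", "strong"),
    ("schools should start later because a study found attendance improved", "strong"),
    ("schools should start later because it is better", "weak"),
    ("schools should start later because students like it", "weak"),
]

# Hypothetical feedback messages keyed by label.
FEEDBACK = {
    "strong": "Nice work! You used specific evidence from the text.",
    "weak": "Try again. What specific evidence from the text supports your claim?",
}


def tokens(text):
    """Lowercase the text and return its set of words."""
    return set(re.findall(r"[a-z']+", text.lower()))


def jaccard(a, b):
    """Word-overlap similarity between two token sets (0.0 to 1.0)."""
    union = a | b
    return len(a & b) / len(union) if union else 0.0


def classify(response):
    """Return the label of the most similar labeled example."""
    best_label, best_score = "weak", -1.0
    for example, label in LABELED:
        score = jaccard(tokens(response), tokens(example))
        if score > best_score:
            best_label, best_score = label, score
    return best_label


def feedback_for(response):
    """Map a student response to the feedback for its predicted label."""
    return FEEDBACK[classify(response)]
```

Even in this toy form, the cost Gault describes is visible: every new prompt needs its own `LABELED` set, collected and categorized by hand.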

With generative AI, we can leverage the vastness of large language models but still build out our own custom rubrics that specify what strong writing looks like for a particular problem. This, in turn, allows us to give much better feedback. What are the exemplars? What are the responses that need feedback? It mirrors some of our work at building models, but it’s more aligned with how a teacher grades writing today, when they’re able to specify guidelines and then offer feedback based on those guidelines.

We’re very excited to have some experienced partners helping us with this work. For example, Quill was recently named a member of Google.org’s new generative AI accelerator program, allowing us to work with Google engineers on this project.

You’re thinking a lot about how to use AI responsibly. What can the edtech field learn from Quill’s approach?

One thing that stood out to me at the recent ASU+GSV conference was that a lot of the AI work happening is not being driven by the education community. It’s being driven by technology folks who are interested in education, but who don’t have any formal sense of pedagogy. A lot of people at the conference were promising that their AI can do anything. It can look at essays. It can look at paragraphs. You can send it work samples. You can send it portfolios. You can send any piece of writing to the AI and it will say something back to you. But I wonder, “Is AI feedback really helpful for student learning? Is that feedback actionable?”

You’re seeing a lot of low-quality AI products that work to some degree, but they’re not specifically designed for students in a way that really helps them be effective in their learning. Dan Meyer has been writing pretty extensively about some of the issues with generative AI in education. Dan’s core idea is that many of these systems just repeat the same feedback in response to very different student answers. For example, there’s a real tendency for AI models to simply reveal the answer to kids. The model will say, “That’s not right. Have you thought about blank?” The blank states the answer explicitly. With such products, there’s a risk of harming students.

To do AI well requires extensive customization. We need to be very intentional about what good writing looks like, what success looks like. Unfortunately, clearly defining success in a way that allows the AI to pattern match isn’t very common in the field right now. And too often when they do exist, those definitions of success aren’t coming from educators.

How is Quill’s approach different from these other products that you’ve described?

At Quill, we’re building a custom rubric for every student writing prompt, where we define up to 100 examples of exemplary writing and of writing that needs to be improved. For every single prompt, we are doing the manual work of defining what good and bad writing looks like. That’s really important because what’s a good answer for a fourth grader is different from what’s a good answer for an eighth grader or a twelfth grader. It’s not effective to ask the AI to make its own judgment calls about the writing. It’s much better to create your own judgment calls about what’s good and bad writing, and then let the AI do the work of pattern matching off of those judgment calls.
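One way to picture a per-prompt rubric is as a small data structure that is turned into instructions for a language model. The sketch below is hypothetical: the field names, the wording of the instructions, and the reading of "up to 100 examples" as a cap on the combined total are assumptions, and no particular model API is called.

```python
from dataclasses import dataclass, field
from typing import List, Tuple

MAX_EXAMPLES = 100  # assumption: the cap applies to all examples combined


@dataclass
class Rubric:
    """Human-written judgment calls for one writing prompt (hypothetical schema)."""
    prompt: str
    grade_level: int
    exemplars: List[str] = field(default_factory=list)
    # Each pair is (response that needs work, feedback a teacher would give).
    needs_work: List[Tuple[str, str]] = field(default_factory=list)

    def __post_init__(self):
        if len(self.exemplars) + len(self.needs_work) > MAX_EXAMPLES:
            raise ValueError(f"a rubric holds at most {MAX_EXAMPLES} examples")


def build_instructions(rubric, student_response):
    """Assemble text that asks a model to pattern match against the rubric."""
    lines = [
        f"Writing prompt (grade {rubric.grade_level}): {rubric.prompt}",
        "Judge the student response ONLY against the examples below.",
        "Do not reveal the answer. Give one actionable suggestion.",
        "",
        "Exemplary responses:",
    ]
    lines += [f"- {text}" for text in rubric.exemplars]
    lines += ["", "Responses that need improvement, with the feedback to give:"]
    lines += [f"- {text} => {feedback}" for text, feedback in rubric.needs_work]
    lines += ["", f"Student response: {student_response}"]
    return "\n".join(lines)
```

The design choice mirrors the point above: the judgment calls live in the rubric that educators write, and the model is only asked to match a new response against them.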

Quill isn’t trying to create a generic AI system. The manual customizations that we’re building allow us to give high-quality feedback. Virtually no one else in the space is taking this approach today. But it’s critical because it’s really damaging for students—especially low-income students—to get low-quality feedback. We all know how frustrating it can be when Siri doesn’t understand you. Imagine a sixth grader trying to use a chatbot that doesn’t really understand them. It can feel really bad. On the other hand, extensive customization can result in really great learning.

The flip side of this is evaluation. You need good tooling to see whether the feedback is working and where it’s not working as well as it should. That tooling doesn’t exist off the shelf in the way that it should right now. It really requires building your own, and that’s a lot of work. With so much writing taking place on Quill, we can look at a series of metrics. Is feedback repeated? How often do students advance their writing? How often do students need to revise their writing to get to an adaptive response? Every single prompt has its own data set. Those evaluation systems allow us to really see when it’s working and when we need to improve it. In response, we retrain the AI to ensure that the feedback is always really high quality. We can also give teachers formative assessment data in a way that allows them to understand learning.
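The metrics mentioned here can be sketched over a simple log of student attempts. The schema below (one list of attempts per student session, each with the feedback shown and a flag for whether the response met the rubric) is an assumption made for illustration, as are the function names and sample data.

```python
from dataclasses import dataclass


@dataclass
class Attempt:
    feedback: str      # feedback shown after this attempt
    met_rubric: bool   # True when the response was accepted


def repeated_feedback_rate(sessions):
    """Share of revisions that got the same feedback as the attempt before."""
    repeats = total = 0
    for attempts in sessions:
        for prev, cur in zip(attempts, attempts[1:]):
            total += 1
            repeats += prev.feedback == cur.feedback
    return repeats / total if total else 0.0


def completion_rate(sessions):
    """Share of sessions that ended with an accepted response."""
    done = sum(1 for attempts in sessions if attempts and attempts[-1].met_rubric)
    return done / len(sessions) if sessions else 0.0


def mean_attempts_to_accept(sessions):
    """Average attempt number of the first accepted response, or None if none."""
    counts = []
    for attempts in sessions:
        for number, attempt in enumerate(attempts, start=1):
            if attempt.met_rubric:
                counts.append(number)
                break
    return sum(counts) / len(counts) if counts else None


# Hypothetical sessions for one prompt.
SESSIONS = [
    [Attempt("Add evidence.", False), Attempt("Add evidence.", False), Attempt("Great!", True)],
    [Attempt("Check your conjunction.", False), Attempt("Great!", True)],
    [Attempt("Add evidence.", False)],
]
```

On this sample data, one of three revisions repeats the previous feedback, two of three sessions end with an accepted response, and accepted responses arrive after 2.5 attempts on average. A high repeat rate on a real prompt would be a signal that its feedback needs rework.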

Educators are crucial in all of this work. How do you recognize teachers’ perspectives, approaches, and needs in Quill’s design and implementation?

When it comes to AI development, working with teachers is not a nice-to-have. It’s absolutely essential to being successful with this work. An explicit principle of our approach to AI is developing with teachers. We have a group of more than 300 teachers in a Teacher Advisory Council (TAC). Every single learning activity goes first to the Council. We ask them, “Can you work with us to understand how this is supporting your students to develop this particular skill? Where is it working well, and where does it need to be improved?” Specifically, we look for teachers from Title I schools because we recognize that their needs and context may be different from teachers in wealthier communities; it’s that audience we want to serve. We compensate TAC teachers because we recognize that we’re asking them to share their time and expertise. Educators’ insights have been absolutely critical.

With AI, getting from good to amazing requires iteration. That process is time intensive because we go through multiple cycles of revision and iteration for every single activity, working with teachers. That’s how we’re able to create such great feedback for kids. We’re trying to mimic great teacher feedback, so actual teacher feedback needs to drive improvements to the system. Again, if you don’t have that feedback loop in place, it results in a much weaker learning experience.

What’s next for Quill, especially as you consider the possibilities and challenges of moving to generative AI?

At the moment, we’re in the switchover process from predictive AI to generative AI. We’ve built about 300 AI models, and the plan is to throw out all those models and to rebuild everything. That requires enormous resources.

At the same time, having this new infrastructure in place unlocks our whole roadmap around more advanced learning strategies for students. We can offer better feedback and we’re not bound to sentence-level feedback. We can also teach other skills, like how to ask really good questions. From there, we want to do things like encourage students to build arguments and engage in Socratic dialogue with a debate bot. We could also help students with longer-form writing. In parallel, we also have the development of our Social Studies and Science offerings—our first offerings outside of the English classroom.

In order to do those things, we have to switch over to generative AI. That means wrestling with the technology and figuring out some tricks for deploying it. For example, we learned that we could move the prompting process along if we used all capital letters or exclamation points.

More importantly, at Quill and as a sector, we need to think about how to use technology that is very powerful but doesn’t have the level of reliability we want. Are there ways to coerce the model towards closing that gap? Or do we need to use a more powerful model? Over time that will be less of an issue, but right now, we’re still in an in-between period. At Quill, we’ve tried to be very thoughtful about approaching these questions and prioritizing the voices of the educators who are using this product with their students.

###

Peter Gault is the Founder and Executive Director of Quill.org, a nonprofit organization dedicated to helping millions of low-income students become strong readers, writers, and critical thinkers. Quill uses artificial intelligence to provide students with immediate feedback on their work, enabling them to revise their writing and quickly improve their skills. Quill has enabled nine million K-12 students in the United States to become stronger readers and writers by providing students with AI-powered feedback and coaching on more than two billion sentences. Teachers are provided with free access to Quill’s research-based curriculum, and the open-source organization works with academic researchers across the country to design and build its technology and curriculum.

Peter leads product strategy, fundraising, and strategic partnerships. He started designing educational software tools and games in high school and studied philosophy and history at Bates College, with a special focus on debate and critical thinking. Quill has been recognized by Fast Company’s Most Innovative Education Companies, Bill & Melinda Gates Foundation’s Literacy Courseware Challenge, and Google.org’s AI for Social Impact Challenge.