Thirty years ago, former head of the National Bureau of Standards and chief scientist of IBM Lewis M. Branscomb asked “Does America Need a Technology Policy?” Even then, technology policy had been underway for decades, yet the questions he posed in 1992 about the role of government and the private sector in our public policy landscape endure.

The breakneck progress of generative AI and the unchecked accumulation of wealth by private technology companies exploiting the lack of regulation seem to be condensing into fresh momentum for comprehensive tech policy. More and more states are introducing their own data privacy laws, the White House and different sections of the government are actively involved in AI regulation, and the FTC is taking a stronger stand against anti-competitive practices of tech giants. Indeed, responding to the power disparity between the producers and consumers of technological innovation is one of very few issues that receive bipartisan support in a very divided political landscape.

However, designing effective, forward-looking technology and technology policies is difficult and compels us to recognize three simultaneous truths: (1) technology and technology policy are never values-agnostic, (2) better tech policy (like better technologies themselves) emerges from processes that include more diverse perspectives and lived experiences, and (3) these processes require the challenging but necessary work of sifting through values tensions and creating spaces for pluralistic values to coexist.

Policy is driven by people, and people cannot be divorced from their values. It might come as a surprise, then, that the multiplicity of values in our society has so far received little direct attention. A better path forward for tech policy would be to acknowledge that there is no such thing as values-agnostic policy; further, the illusion of such agnosticism obfuscates values that may be in conflict with the public interest. This dynamic allows for an entrenchment of the interests of those with power and further marginalizes historically overlooked communities.

At Siegel, our work explores how we, as both a foundation and a member of the responsible tech ecosystem, might increase the meaningful participation and agency of communities to shape the development, deployment, use, and stewardship of their digital infrastructure. In light of renewed conversations around technology and technology policy, it has also led us to meaningfully explore how we can empower communities to shape policies and design digital infrastructures that best reflect their values and aspirations. We ask: How might we identify values that ought to guide the design, development, and deployment of technologies that affirm our rights and promote the public interest, today and for our future?

Towards this aspirational goal, we must start with a shift in discourse around technology and technology policy from values-agnostic to values-driven.

Defining Values

Value Sensitive Design (VSD) defines values as “what is important to people in their lives, with a focus on ethics and morality.” However, specific values are rarely universally held. Our moral beliefs, and consequently our values, are shaped by various factors and circumstances, and it is uncommon to see complete alignment between individuals or communities.

Values also exist at multiple levels in our society. Individuals carry values that they often apply in their lives and decision-making. Values also exist at the community level and at larger levels of social organization, such as the democratic ideals we claim to embody as a nation. In addition to the lack of value alignment between individuals, there are also differences in values between individuals and their communities, and between different communities.

Values are often in tension

This definition of values acknowledges that values are often in tension, rather than static and existing on their own. In the technology context, values show up as a range of concepts such as privacy and security, efficiency and sustainability, or as mantras such as “move fast and break things.” The idea that values are embedded in our technology and the policies that surround it is not a new one, nor is the idea that values are often in tension. In a democratic society, these value tensions are valuable and necessary. They create an opportunity to articulate and deliberate on the principles that underpin our technological advancements and societal structures, and to make informed decisions about why technologies are designed as they are and what policies aim to protect.

However, advocates for value-informed tech policy are challenged by emerging technology that outpaces our ability to think together, navigate value tensions, and influence tech policies thoughtfully. The Collingridge dilemma draws attention to this core challenge in the regulation of new technologies – if you regulate too early, you might not understand the technology enough to make good rules; but if you wait until you have all the information, it might be too late to make effective changes. Policymakers are routinely outpaced by technology innovation, which makes for gridlock, tech illiteracy, and a mismatch between the tools we need and the ones we have.

Lagging tech policy is in part a result of the difficult, but necessary, work of multi-sector collaboration. In this climate, our social values and democratic ideals provide a solid foundation for the principles that can guide both our technology and associated policy development.

Moving from Values-Agnostic to Values-Driven Policy

Moving from values-agnostic to values-driven policy will not be easy, but is necessary to produce a world that strikes a healthier balance between innovation and inclusion. That’s a world we at Siegel and many of our partners aim to produce. We think these are good starting points:

1. Acknowledge that values exist

When shifting from “values-agnostic” to “values-driven” policymaking, we need to acknowledge that values drive decision-making. Certain values that inform decisions can be in tension with values that we see as necessary to a healthier relationship between society and technology. Without naming the values that drive decisions, we lose the ability to trace and interrogate whose values are being centered in this process, and therefore who wields power in decision-making.

2. Use intentional processes to surface and engage with values

There is a growing acceptance that greater participation can enhance our regulatory practices and encouraging signs of an emerging philosophy that treats values as central to the policy making process. This creates an opening for our diverse communities to bring their lived experiences and principles to the policymaking exercise.

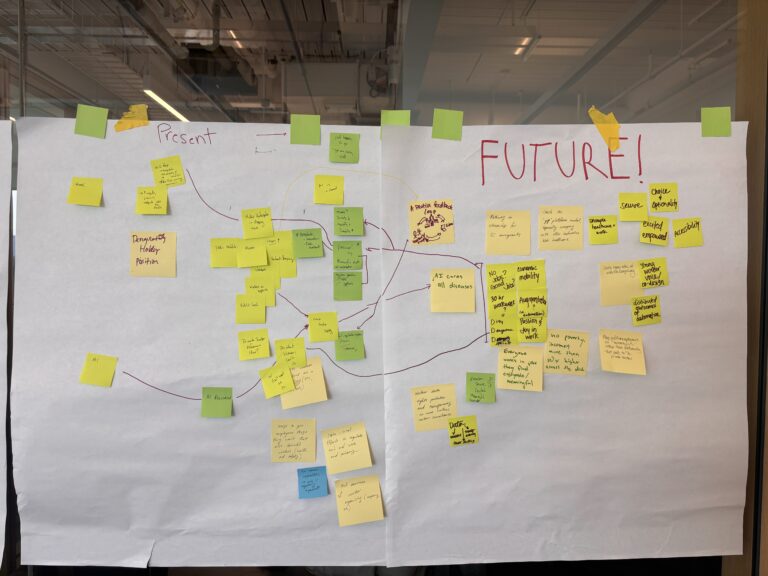

Operationalizing values means turning concepts into action. When a multiplicity of values is brought to the table, special processes are needed to facilitate the ethical evaluation, integration, and prioritization of these diverse perspectives. On a practical level, this means giving values alignment its own step in the policy process. It means using deliberate activities, such as cultivating space for a community to identify shared values, name values in tension, and think together toward actionable steps within the confines of value tensions. These practices can also function as a learning space, and promote an individual’s sense of responsibility and belonging to the community.

Such a shift in approach offers technology regulators and policy makers an opportunity to appreciate the heterogeneity of our communities and encourage a vision of values pluralism – recognizing that sections of society will have competing or conflicting values that need accommodation. Just as our democratic society invites a variety of ideas and thoughts to thrive, our policies must acknowledge the range of values and commit to public participation and reflection, so that the appropriate ones are incorporated in our regulations in pursuit of the public interest and social justice.

For its part, the public can advocate for a greater emphasis on values discourse in our policies and in the process of formulating them. Engaging these tensions meaningfully provides an opening to build a muscle for dialogue and collective action.

3. Revisit values that are no longer producing their intended outcomes

Tech policy researchers, responsible technology advocates, and social scientists provide useful frameworks for thinking through the myriad values that ought to guide tech innovation and regulation. These frameworks¹ are useful as we decipher what values we ought to forefront in this work and what value tensions will arise.

As we look back on the desired outcomes that have historically driven our tech policy (i.e., national security, industrial competition, and economic prosperity), we can see how the values of the policy designers led to choices about how to achieve those outcomes (i.e., the loosening of regulations and the cultural supremacy of innovation for innovation’s sake) – whether or not they named such values.

While the desired outcomes have not changed, many of the corresponding policies are not meeting urgent needs. Existing attempts at regulation have offered us a moment to reflect on which values are now best suited to drive technology policy.

Value Tensions in Action

In the end, policymaking requires that we tip the scale towards one value over another. Over the course of the coming months, we will explore practical case studies that showcase how real technology organizations wrestle with conflicting values in their work, and further untangle some of the threads raised in this piece.

If we want public interest technology and technology policies, we have to involve the public in their creation, a prospect that will inherently weave together diverse values, perspectives, and lived experiences – and often lead to drawing out values in tension. When developed carefully, these technologies have the potential to enhance aspects of our lives and strengthen elements of our democracy.

Are there core values driving your work or your organization’s work, and have you experienced tension among those values? Tell us about it here.

This piece was authored by Madison Snider, Research Associate, and Robin Zachariah Tharakan, Research Fellow.

¹ All Tech Is Human’s 10 Principles: https://alltechishuman.org/principles; Design Justice Network’s Principles: https://designjustice.org/read-the-principles; Tech Equity Collaborative’s Values: https://techequitycollaborative.org/about/