What does artificial intelligence mean? It might bring to mind sleek, futuristic devices that learn to anticipate your needs, or tools for managing complex systems like cities, public health, or supply chains. Not for me. I imagine the old board game, Mouse Trap. In that game, players navigated a series of convoluted, interlinked devices: a boot kicking a bucket, knocking a marble down a chute to trigger a falling basket. Sometimes the traps worked, but the game’s rules revolved around the assumption of failure: odds were high that something in that system would go wrong, and your mouse would live to roll the dice again.

AI is a vague term. It’s been used to describe everything from complex neural networks and machine learning to simple algorithmic sorting. Most commonly, it’s evoked to create the illusion of a single, unified process: an intelligence. But beneath the surface of slick interfaces, AI more closely resembles a game of Mouse Trap than the sophisticated science fiction workings of the Starship Enterprise. Artificial intelligence is not an individual system. Rather, that “intelligence” emerges from the interactions between pieces of many systems, typically driven by a combination of people, machines, sensors, code, and data. It’s common for these systems to obscure human contributions – hidden labor, or “ghost work,” that pushes these so-called automated processes along whenever they get stuck. When humans aren’t involved, automation can introduce additional traps for those facing precarity, exclusion, and risk.

When we look at AI through its components and relationships, we can identify discrete but overlapping physical, social, and digital infrastructures. For example, your smart device may interact with you through the physical world by turning on some lights or music. It may connect you to your social networks by distributing a post. Both of these actions are enabled by digital processing capabilities. It’s tempting to view these infrastructures as isolated pieces, but it’s more accurate to consider AI as what emerges from the orchestrated interaction of these pieces. Decisions made in one sphere are embedded and reinforced as they move through the others. The interactions of these systems create a sense of a unified but dynamic intelligence, while smooth interfaces present the illusion of a seamless flow. That makes it harder for us to see what’s really at work – and at stake.

Consider the common metaphor of AI as a data pipeline. This suggests a linear process: data goes into a system such as a data center, where it is analyzed and turned into a decision (or product). Such pipelines are certainly parts of AI systems. Yet, this physical metaphor excludes crucial impacts and influences that shape and result from those decisions. In fact, even a literal pipeline isn’t isolated in such a straightforward way. Whether we mean oil or data, the pipeline is a slice of a larger process of treatment, storage, and distribution.

The strength of the multidimensional view is in how it examines relationships between infrastructures, systems, inputs and products that are more complex than linear metaphors allow. That’s important, because defining AI as a linear digital process is counterproductive to eliminating its potential harms. It assumes that data arrives ready to be processed, that it reflects desirable social and economic conditions which we seek to perpetuate, and that the output of these algorithms stops at the production of that decision or product.

Looking at the history of complex systems, we find a useful heuristic from the cyberneticist Stafford Beer: The Purpose of a System is What it Does. In other words, the intentions that motivate the design of these systems are irrelevant to the impacts they actually make. For example, industries may design algorithms to allocate resources based on vast amounts of data, only to find that these systems amplify risks to particular users or communities. Looking at the pipeline – rather than its effects – suggests that a system is “working” even if its secondary effects are damaging.

Looking at the results and behaviors of systems through a multidimensional lens is necessary for holding systems accountable for the conditions they create, sustain, or hinder. As we’ll see, this works for understanding possible harms, as well as the possible failure points of otherwise carefully designed tools. A multidimensional approach, emphasizing digital, physical, and social aspects of AI infrastructure, can reveal such frictions.

The Multidimensional View

[The Multidimensional view is] a new understanding of physical, social, and digital infrastructure that recognizes the interdependence of these three dimensions. Any decision or change in one dimension of infrastructure must consider the other two dimensions in equal measure. By doing this, we can design and maintain infrastructure that’s responsive, resilient, and cost-effective and creates further opportunities for society.

Digital infrastructure is everywhere. It is the data and the software that analyzes, manipulates, and translates that data. It’s the hardware that drives that software, the sensors that collect information, and the cyber-physical systems – from robots to automated vehicles – that make decisions based on that data. There’s a dense connective fabric between them, too, from cell phone towers to ethernet cables.

AI is part of that digital infrastructure. It is ethereal and disembodied, residing in code and hardware. AI is a human-encoded tool designed to evaluate data and enact decisions through websites, platforms, networks, and computer systems. That automated data evaluation and decision making reflects past human decisions, priorities, and conditions. Rather than seeing this “digital infrastructure” in isolation, however, a multidimensional approach sees how AI is intertwined with social and physical infrastructures. The infrastructures contribute first to how AI is designed and implemented, and then to ways its decisions are applied.

Articulating the social factors of these encoded decisions, and the behaviors that are shaped through the interactions of people, digital tools, and our built world, is essential for understanding the impact of AI systems.

AI and Social Infrastructures

Social infrastructures are infused into the data that AI analyzes and models for its predictions or insights. Data is often collected from the most accessible sources, such as internet databases, websites such as Wikipedia or Reddit, or historical data (often collected for different purposes). Important context is often removed to make this data usable to a particular system. Even seemingly direct uses of data such as energy use, or data about data storage, reflect social infrastructures that shape what we ask, how we ask it, what knowledge and information we prioritize, what we build to measure it, and how we use what we learn.

One example of social infrastructures inscribed into data is the racial bias in facial recognition software. This software can be based on models that under-represent people of color. For example, one of the largest datasets for image recognition training was the FFHQ dataset, a collection of thousands of images of faces from the photo sharing website Flickr. Looking closely at the FFHQ model revealed that the images in the training dataset were overwhelmingly white. As a result, the system was less precise in recognizing people of color, and up to 100 times more likely to misidentify black men.

As this racial bias moved from social infrastructure into digital infrastructure, image recognition software encoded it into stable decision-making processes. Though the data created models that were less accurate in understanding the faces of people of color, the data was nonetheless used in image recognition systems by designers who either did not understand, or chose to ignore, the social risks. It should come as no surprise that image recognition data used in surveillance systems is also known to create higher rates of false identifications for people of color, with consequences including false imprisonment.

These interactions between the social and digital spheres must be better understood to make better use of these systems – or to decide not to use them at all. A judge facing a defendant who was nowhere near the scene of a crime should not be swayed by a faulty algorithm offering a 90% likelihood that he was. Poorly developed digital infrastructures can incentivize a judge to err on the side of a machine rather than the human. When we prioritize digital infrastructures over social infrastructures, we risk inflicting enormous harm.

AI and Physical Infrastructures

The multidimensional approach is best understood as a Venn diagram, rather than distinct pieces exchanging information. Physical and social infrastructures overlap with AI, for example, in the physical placement of surveillance cameras and the data that drives those decisions. If cameras are assigned to neighborhoods where police are frequently responding, the surveillance camera will impose a new feedback loop of police calls, reinforcing the patterns reflected in the data: measured crime rates do not only reflect rates of “crime,” they also reflect rates of response to reported crimes.
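The feedback loop described above can be illustrated with a small simulation. This is a toy sketch under stated assumptions, not a model of any real deployment: the neighborhood names, incident counts, detection rate, and the function `simulate_camera_feedback` are all hypothetical. Two neighborhoods have the same underlying number of incidents, but one starts with one more camera, and each round a new camera goes wherever the most incidents were recorded.

```python
def simulate_camera_feedback(true_incidents, cameras, rounds, detect_pct_per_camera=10):
    """Toy model: each round, place one new camera in the neighborhood
    with the most *recorded* incidents in that round.

    true_incidents: actual incidents per round, per neighborhood (hypothetical).
    cameras: starting camera count per neighborhood (hypothetical).
    detect_pct_per_camera: assumed percentage of incidents each camera records.
    """
    cameras = dict(cameras)  # copy so the caller's dict is not mutated
    history = []
    for _ in range(rounds):
        # Recorded incidents depend on coverage, not only on what happened.
        recorded = {
            name: true_incidents[name] * min(100, detect_pct_per_camera * cameras[name]) // 100
            for name in cameras
        }
        history.append(recorded)
        # The "data-driven" decision: add a camera where the records are highest.
        target = max(recorded, key=recorded.get)
        cameras[target] += 1
    return cameras, history


# Identical underlying incident counts; neighborhood A starts with one extra camera.
final_cameras, history = simulate_camera_feedback(
    true_incidents={"A": 100, "B": 100},
    cameras={"A": 2, "B": 1},
    rounds=5,
)
print(final_cameras)
print(history[0], history[-1])
```

In this sketch every new camera goes to neighborhood A, and A's recorded incidents keep climbing even though the underlying counts never differ. The records measure where the system is looking as much as what is happening.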

AI’s outputs into the physical sphere can be positive when physical and social dimensions are integrated into AI-informed, or algorithmic, projects. Transportation infrastructure is a good example: where do we place new bus routes, and how do we evaluate data to guide those decisions? The deeper we probe into data, and the more socially inclusive our decision-making process, the better the output is likely to be.

This was the subject of an early AI-infrastructure furor in Boston, when MIT designed an algorithm to redesign school bus routes. These algorithms were optimized for “equity,” factoring in essential changes to existing routes: better start times had previously been reserved for the wealthiest neighborhoods. That left low-income neighborhoods with a greater burden to wake up early, which is tied to lower academic performance and even health outcomes. The resulting plan distributed these start times more equitably, and would save the school district nearly $5 million by cutting 120 buses from the fleet (ibid). Nonetheless, the school board and researchers faced a backlash to the change – among wealthier parents, but also among representatives of lower-income neighborhoods. The plan was scrapped.

In this case, even a seemingly faultless AI system was being integrated into physical infrastructure (a transportation network) without acknowledging the challenges posed by social infrastructures – particularly, questions of power and politics. The algorithm had sorted out these improvements to the system “on paper” in less than 30 minutes. But the necessary work of cultivating community support, recruiting participants into its planning and implementation, and fleshing out objections through political engagement, was all sidestepped.

If we return to Beer’s adage – that The Purpose of a System is What it Does – the “purpose” of this algorithm was to activate a social backlash that eroded inter-community trust. Without addressing, and potentially challenging, the complex reality of who held power in these communities, the intended system would never be deployed. AI as physical infrastructure cannot be used to paint over the cracks in social infrastructures.

Too often, algorithmic policies are deployed into neighborhoods with weaker connections to power and decision-makers. Flawed, harmful, and oppression-reinforcing algorithms, from surveillance systems to predictive policing, can be deployed into such communities with less scrutiny and oversight. Newspapers and selectmen may be less likely to take these concerns seriously, or may face daunting levels of opacity when trying to understand what systems are even in place.

These gaps reveal the flaws of isolating social infrastructures from AI. Harmful tech can be deployed without detection until predictable impacts become impossible to ignore. But even the good of a hypothetically well-designed, well-intentioned system would be lost if the social realities, contexts, and politics of power are ignored.

Toward A Multidimensional Approach to AI

How might humans and machines learn, work, and build together? As the future is increasingly shaped by automated, cyber-physical systems – from smart cities to transportation to the justice system – it’s critical that we understand the interconnectedness of factors that shape an AI’s decisions and actions.

Treating data as inherently objective or unbiased limits our ability to move the world in new directions. If we uncritically embrace data that reflects social injustice, economic disparity, and institutionalized racism, then we will build machines that preserve that world, inscribing its products and conditions into automated decision making.

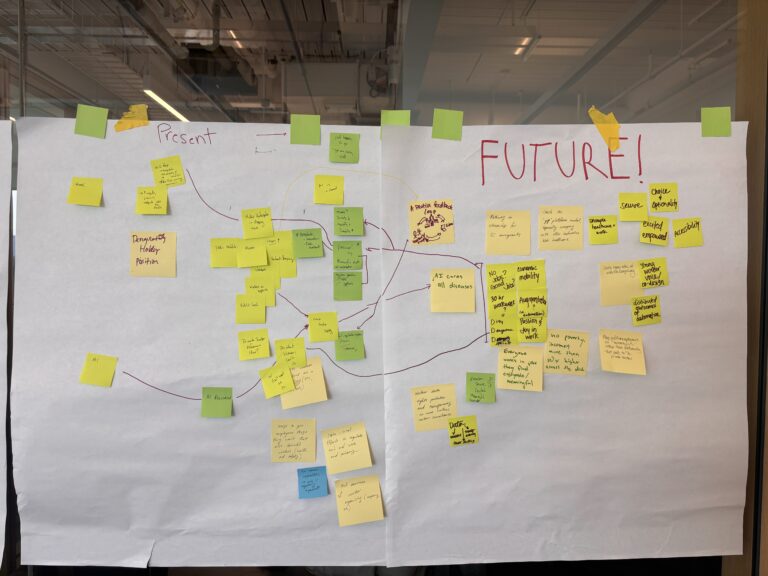

A multidimensional view of AI calls for interrogating the data we collect through social, physical, and digital lenses, raising critical questions about how we apply it. It means moving beyond a “pipeline” view that treats data as an unproblematic, complete resource, taking a wider view of what we put in and how we measure what comes out. That calls for transparency, participation, and oversight in the ways systems are designed and implemented. Engaging communities in digital infrastructure projects early and often creates a resonance with social infrastructure that fuels successful projects.

Taking a multidimensional view of artificial intelligence technologies acknowledges that such “intelligence” is a product of many factors beyond the cloud servers and digital infrastructures. This intelligence needs to be defined by the communities who live, play, and work with it. Data is socially defined and deployed. It is shaped by, and shapes, our physical and social environments. How can communities use their power to shape – or refuse to shape – the algorithms they interact with and rely on? Data alone is not enough: we need to understand the roots and assumptions buried beneath that data, and ask whether it comes from a world we want to sustain.

This reflection was written by Eryk Salvaggio, Research Communications Consultant.